ScienceLogic has updated a PowerPack module for monitoring graphics processing units (GPUs) in its SL1 artificial intelligence for IT operations (AIOps) platform, adding a set of event policies that trigger alerts whenever, for example, certain temperature or performance thresholds are exceeded.
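As a rough illustration of how a threshold-based event policy works in general, here is a minimal Python sketch. The `EventPolicy` class, the metric names `gpu_temp_c` and `gpu_util_pct`, and the threshold values are all hypothetical and do not reflect the actual schema or defaults of the SL1 GPU PowerPack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventPolicy:
    """Hypothetical threshold rule; not the SL1 PowerPack's actual schema."""
    metric: str
    threshold: float
    severity: str


def evaluate(policies, sample):
    """Return (metric, value, severity) for every policy whose threshold the sample exceeds."""
    alerts = []
    for p in policies:
        value = sample.get(p.metric)
        # Metrics missing from the sample are skipped rather than treated as a breach
        if value is not None and value > p.threshold:
            alerts.append((p.metric, value, p.severity))
    return alerts


# Illustrative thresholds only: 85 degrees C for temperature, 95% for utilization
policies = [
    EventPolicy("gpu_temp_c", 85.0, "major"),
    EventPolicy("gpu_util_pct", 95.0, "minor"),
]
sample = {"gpu_temp_c": 88.5, "gpu_util_pct": 72.0}
print(evaluate(policies, sample))  # [('gpu_temp_c', 88.5, 'major')]
```

Here only the temperature reading crosses its threshold, so a single "major" alert is raised.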

At the same time, ScienceLogic has added the ability for the platform to automatically flag issues such as storage exhaustion or network oversubscription before they escalate. It has also updated its intelligent Device Investigator tool, which can now score outliers discovered in the time-series data it collects so they can be prioritized.
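ScienceLogic has not detailed how Device Investigator scores outliers. To give a general sense of what scoring and prioritizing outliers in a time series can look like, the sketch below uses a common textbook technique, the robust z-score based on the median and median absolute deviation (MAD). The function names and the 3.5 cutoff are assumptions chosen for illustration, not ScienceLogic's method.

```python
from statistics import median


def outlier_scores(series):
    """Robust z-score per point, using the median and MAD (median absolute deviation)."""
    med = median(series)
    mad = median([abs(x - med) for x in series])
    if mad == 0:
        # A perfectly flat series has no meaningful spread, so nothing stands out
        return [0.0 for _ in series]
    # 0.6745 scales MAD so scores are roughly comparable to standard z-scores for normal data
    return [0.6745 * (x - med) / mad for x in series]


def rank_outliers(series, cutoff=3.5):
    """Return (index, value, score) for points beyond the cutoff, highest |score| first."""
    scored = [(i, x, s) for i, (x, s) in enumerate(zip(series, outlier_scores(series)))]
    flagged = [t for t in scored if abs(t[2]) > cutoff]
    return sorted(flagged, key=lambda t: abs(t[2]), reverse=True)


# Hypothetical metric samples with one spike and one dip
series = [50, 51, 49, 50, 52, 50, 97, 48, 50, 10]
print([(i, x) for i, x, _ in rank_outliers(series)])  # [(6, 97), (9, 10)]
```

Ranking by the magnitude of the score puts the spike to 97 ahead of the dip to 10, which is one simple way to decide which anomaly deserves attention first.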

ScienceLogic has also added Apache Superset support to provide an additional dashboard option, along with the ability to use the Open Database Connectivity (ODBC) protocol to export data to the Tableau and Microsoft Power BI business intelligence (BI) applications.

Finally, the Dynamic Application Builder tool for creating custom monitoring tools has been updated with a low-code wizard that configures HTTP/SSH credentials, pulls application programming interface (API) or command line interface (CLI) payloads, and exports directly to SL1. It also supports rapid creation of PowerPack modules via snippet arguments, JC parsers, and custom headers.

Additional planned enhancements for the Dynamic Application Builder include API testing, basic authentication and support for the OAuth2 protocol, integration with the “Low-Code Tools” PowerPack for bulk buildouts of IT services, and stronger SSL verification and authentication handling.

Michael Nappi, chief product officer at ScienceLogic, said the company also plans to build out its own application performance management (APM) capabilities as part of a larger effort to enable IT teams to unify the management of applications and the IT infrastructure they run on.

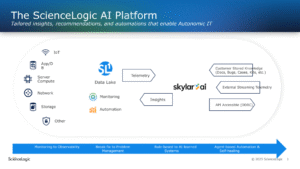

At the core of the ScienceLogic platform is Skylar Analytics, an AIOps engine that combines machine learning algorithms with advanced analytics to surface trends and predict likely events. Nappi said that capability is now being made more accessible through multiple large language models (LLMs), which provide both a natural language interface to the data lake that drives the core AIOps platform and access to various knowledge bases.

None of these capabilities is likely to eliminate the need for IT professionals, but they do promise to let IT teams offer more self-service options built on transparent AI capabilities, he added. If IT teams can’t see how an AI platform actually accomplished a task, they are not likely to trust any of the output it generates, noted Nappi.

It’s not clear to what degree IT teams are relying on AI to manage IT operations, but as more organizations use AI coding tools to build more applications than ever, the issue may soon be forced. There is, of course, already no shortage of AIOps platforms to choose from. The one thing that is certain is that, as IT environments become more complex, whichever AIOps platform an IT team standardizes on should be carefully evaluated, because it won’t be easily replaced once it has been deployed.