For years, data center operators have wrestled with the fact that most downtime isn’t caused by catastrophic hardware failure. Instead, it’s the cumulative effect of missed alarms, delayed reactions, and human error during day-to-day operations. The Nokia Bell Labs Consulting data center fabric reliability study redefines what operational reliability looks like. It quantifies the impact of moving from a legacy Present Mode of Operation (PMO) to a modern Future Mode of Operation (FMO) powered by Nokia SR Linux and Event-Driven Automation (EDA). The study found that organizations adopting the combined solution achieved up to 23.9 times less downtime, a reduction of roughly 96% compared with conventional solutions.
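The two headline figures are the same result expressed two ways. As a quick sanity check (the function name here is purely illustrative and not part of the study), a "times less downtime" factor converts to a percentage reduction like this:

```python
def downtime_reduction_pct(factor: float) -> float:
    """Convert an 'N times less downtime' factor into a percentage reduction."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    # Remaining downtime is 1/factor of the baseline, so the reduction is 1 - 1/factor.
    return (1.0 - 1.0 / factor) * 100.0


print(f"{downtime_reduction_pct(23.9):.1f}%")  # prints 95.8%
```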

From Passive Monitoring to Predictive Action

Traditional network monitoring is reactive by design. Engineers sift through dashboards, graphs, and log files, correlate syslogs, and manually push configuration fixes when congestion or packet drops occur. This model doesn’t scale. SR Linux and EDA introduce an intelligent, event-driven system in which telemetry, analytics, and automated workflows work together to detect and resolve problems in real time.

At the heart of this shift is the Event Handling System (EHS) within SR Linux. The EHS lets operators pre-program actions that respond instantly to network anomalies such as port saturation or packet loss. When a threshold is reached (for example, a switch port crosses 90% utilization or packet drops begin to spike), the system automatically triggers an EDA workflow that might push a routing-policy update, rebalance traffic, or disable a failing interface before the degradation cascades. Instead of waiting for an operator to notice and react, the network itself executes the fix.
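To make the threshold-to-action idea concrete, here is a minimal Python sketch of the decision logic. It is not the SR Linux EHS API or an EDA workflow definition. The `PortSample` type, the action names, and the drop-rate limit are all assumptions made for illustration; only the 90% utilization figure comes from the example above.

```python
from dataclasses import dataclass


@dataclass
class PortSample:
    """One telemetry reading for a port (all fields are hypothetical)."""
    name: str
    utilization_pct: float  # link utilization, 0-100
    drops_per_sec: float    # packet drops observed in the last interval


# Illustrative thresholds: 90% mirrors the example in the text,
# the drop-rate limit is an assumption.
UTILIZATION_LIMIT_PCT = 90.0
DROP_RATE_LIMIT = 100.0


def choose_actions(sample: PortSample) -> list[str]:
    """Return the (hypothetical) remediation workflows to trigger for a sample."""
    actions = []
    if sample.utilization_pct >= UTILIZATION_LIMIT_PCT:
        actions.append(f"rebalance-traffic:{sample.name}")
    if sample.drops_per_sec >= DROP_RATE_LIMIT:
        actions.append(f"disable-interface:{sample.name}")
    return actions


print(choose_actions(PortSample("ethernet-1/1", 93.5, 12.0)))
```

The point of the sketch is that the condition and the response are defined ahead of time, so no operator has to be in the loop when the threshold is crossed.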

Real-World Impact in Operations

The operational modeling performed by Bell Labs shows that these event-driven capabilities directly reduce mean time to restore and common-cause failure probabilities across the data center network. In practical terms, that means fewer service tickets, fewer maintenance escalations, and a drastic reduction in “human-in-the-loop” errors.
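Why restore time matters so much can be seen with the standard steady-state availability formula, availability = MTBF / (MTBF + MTTR). The sketch below is not the Bell Labs model, and the input values are hypothetical placeholders rather than figures from the study. It only shows how a shorter restore time translates into fewer downtime hours per year.

```python
HOURS_PER_YEAR = 8760.0


def annual_downtime_hours(mtbf_hours: float, mttr_hours: float) -> float:
    """Expected downtime per year from mean time between failures and mean time to restore."""
    availability = mtbf_hours / (mtbf_hours + mttr_hours)
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical inputs: same failure rate, different restore times.
manual = annual_downtime_hours(mtbf_hours=2000.0, mttr_hours=2.0)
automated = annual_downtime_hours(mtbf_hours=2000.0, mttr_hours=0.1)
print(f"manual restore: {manual:.2f} h/yr, automated restore: {automated:.2f} h/yr")
```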

Consider a financial services provider running low-latency trading workloads. During peak market hours, a burst of east-west traffic saturates multiple leaf ports. In a legacy setup, network operators might not identify the root cause for several minutes, resulting in packet loss and delayed transactions. With SR Linux and EDA, telemetry from those ports immediately triggers the EHS, which pushes a temporary routing-policy adjustment to redistribute flows within seconds. The trading systems remain unaffected, and no revenue is lost.

Continuous Feedback and Autonomous Recovery

EDA extends SR Linux monitoring into a closed-loop automation framework. It continuously collects telemetry from network devices and relevant services, correlating events across the network to detect patterns that precede outages, such as incremental latency increases or asymmetric traffic flows. When these conditions are identified, EDA can execute programmatic remediation actions automatically, whether that means re-provisioning a link group, adjusting QoS parameters, or initiating a rollback.
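As a rough illustration of spotting a pattern that precedes an outage, the following sketch flags a sustained upward drift in latency samples. It is a simplified stand-in, not EDA's correlation logic. The class name, window size, and rise threshold are assumptions chosen for the example.

```python
from collections import deque


class LatencyTrendDetector:
    """Flags a sustained upward drift in latency samples (hypothetical helper)."""

    def __init__(self, window: int = 5, min_rise_ms: float = 1.0):
        self.samples = deque(maxlen=window)
        self.min_rise_ms = min_rise_ms

    def observe(self, latency_ms: float) -> bool:
        """Add a sample; return True when every sample in a full window is higher
        than the one before it and the total rise is at least min_rise_ms."""
        self.samples.append(latency_ms)
        if len(self.samples) < self.samples.maxlen:
            return False
        values = list(self.samples)
        rising = all(b > a for a, b in zip(values, values[1:]))
        return rising and (values[-1] - values[0]) >= self.min_rise_ms


detector = LatencyTrendDetector(window=4, min_rise_ms=0.5)
for reading in [1.0, 1.1, 1.3, 1.6, 2.0]:
    if detector.observe(reading):
        print(f"drift detected at {reading} ms; trigger remediation workflow")
```

In a closed loop, the `True` result would feed a remediation step rather than a print statement, and the outcome of that step would be observed by the same telemetry stream.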

This kind of proactive correction transforms monitoring from a diagnostic function into a self-healing mechanism. Over time, each event contributes to a more complete understanding of network behavior, improving the accuracy of future responses. Bell Labs’ modeling attributes nearly 99% of the total reliability gains in the best-case FMO to these kinds of operational improvements rather than to hardware or software quality alone. This is a clear indicator that the automation layer is the game-changer in modern data center network monitoring.

The Business Case for Intelligent Operations

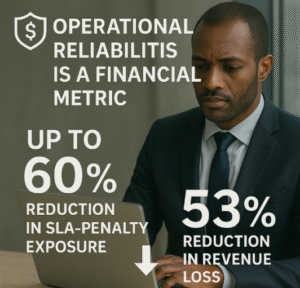

Operational reliability is no longer just a technical metric. In today’s digital economy, it’s also a financial one. The report shows how improved uptime translates directly into cost avoidance, projecting up to a 60% reduction in SLA-penalty exposure and a 53% reduction in revenue loss for organizations transitioning from legacy PMO environments to SR Linux and EDA architectures. For a mid-sized enterprise, that can represent millions in annual savings, not to mention protection of brand reputation and customer trust.

Beyond pure economics, automating event handling also reshapes workforce activity. Engineers spend less time on repetitive fault isolation and more time on higher-value tasks like network optimization, capacity planning, and design. This evolution from reactive firefighting to proactive assurance aligns operational teams with business outcomes rather than raw uptime metrics.

Reliability as a Continuous Process

Network reliability isn’t a static state achieved after deployment; it’s an ongoing property maintained by automation. SR Linux and EDA ensure that every operational event, whether planned or unplanned, becomes an opportunity for the network to learn, adapt, and reinforce its intended state.

Through EHS, the fabric gains reflexes. Through EDA, it gains foresight. Together they create a data center network that monitors itself, corrects itself, and delivers measurable business value.

This blog post is number 3 in a series of 4. To see the other posts, visit: https://techstrong.it/category/sponsored/blc-report-blog-series/

You can also find out more about the study here and read the executive summary here.