When data-center operators talk about “reliability,” they usually think of hardware redundancy or uptime. In practice, though, the greatest threat to uptime often originates long before a packet ever hits the wire. Errors during configuration and provisioning, such as a mistyped command, an unvalidated change, or an untested rollout, can introduce risk that lingers for months.

The recent Nokia Bell Labs Consulting study of data center fabric reliability and its associated model show how Nokia SR Linux and Event Driven Automation (EDA) transform Day 0 and Day 1 activities from a manual, error-prone process into a predictable, self-verifying system that directly improves network availability and financial performance.

Closing the Reliability Gap Between Design and Deployment

In the design phase, architects model intent, including topology, policies, and expected behaviors. But the hand-off to configuration and provisioning has traditionally been where intent fractures. Configuration templates are interpreted manually, changes are applied device by device, and validation depends on operator diligence. The Bell Labs Consulting study distinguishes clearly between design (planning and modeling) and deployment (configuration and provisioning in production), emphasizing that reliability gains accelerate when automation ensures those two stages mirror each other precisely.

The EDA digital twin is a like-for-like environment that lets engineers design and validate configurations with the exact same intent inputs used in production. Within the digital twin, SR Linux’s modular, model-driven architecture interprets configuration data consistently across the stack, while EDA’s orchestration layer simulates the downstream effects of every change.

Because digital twin configurations are production-grade artifacts and not just prototypes, they can be deployed directly once they’re validated. This is a major shift away from manual, error-prone configuration and provisioning: it eliminates the translation gap between the lab and the live network, so engineers can minimize configuration drift and catch deployment errors before they ever reach production.
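To make the “deploy exactly what was validated” idea concrete, here is a minimal Python sketch. It assumes a hypothetical `render` function that deterministically turns intent into a configuration artifact, and it uses a SHA-256 digest to check that the artifact promoted to production is byte-for-byte the one validated in the twin. None of these names come from EDA; they only illustrate the principle.

```python
import hashlib
import json


def render(intent: dict) -> str:
    """Deterministically serialize intent into a config artifact (hypothetical format)."""
    # sort_keys and fixed separators make identical intent always yield identical text
    return json.dumps(intent, sort_keys=True, separators=(",", ":"))


def digest(artifact: str) -> str:
    """Fingerprint an artifact so the twin and production copies can be compared."""
    return hashlib.sha256(artifact.encode("utf-8")).hexdigest()


def promote(intent: dict, validated_digest: str) -> str:
    """Re-render for production and refuse to deploy anything the twin did not validate."""
    artifact = render(intent)
    if digest(artifact) != validated_digest:
        raise ValueError("artifact differs from the version validated in the digital twin")
    return artifact


# Usage: validate in the twin, record the digest, then promote the same intent.
twin_artifact = render({"leaf1": {"asn": 65001}})
validated = digest(twin_artifact)
prod_artifact = promote({"leaf1": {"asn": 65001}}, validated)
```

Because the same intent always renders to the same text, any edit made between validation and deployment changes the digest and blocks promotion.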

Intent, Validation, and the Dry-Run Guarantee

EDA enforces reliability through built-in dry-run validation before any configuration or provisioning action. Each proposed change is tested against the modeled intent and the current state of the network. If the system detects inconsistencies, such as missing parameters, policy conflicts, or topology mismatches, the deployment stops automatically. In the study’s reliability model, this mechanism directly lowers configuration-related failure rates and mean time to restore (MTTR), contributing to the 95% reduction in downtime observed when moving from legacy operation (the Present Mode of Operation, or PMO) to SR Linux and EDA (the best-case Future Mode of Operation, or FMO).
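The validation gate described above can be sketched in a few lines of Python. The `Intent` and `Change` types, the three checks, and `deploy` are hypothetical stand-ins, not EDA’s API; they show only the control flow of validating first and applying a change only when no inconsistencies are found.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Change:
    """A proposed change to one node (hypothetical shape)."""
    node: str
    params: dict


@dataclass
class Intent:
    """Modeled intent: required parameters, known topology, and simple policy rules."""
    required_params: set
    topology: set  # nodes that exist in the modeled fabric
    forbidden_values: dict = field(default_factory=dict)  # param -> disallowed values


def dry_run(change: Change, intent: Intent) -> list:
    """Return every inconsistency found; an empty list means the change may proceed."""
    errors = []
    for p in sorted(intent.required_params - set(change.params)):
        errors.append(f"missing parameter: {p}")
    for p, bad in intent.forbidden_values.items():
        if p in change.params and change.params[p] in bad:
            errors.append(f"policy conflict: {p}={change.params[p]}")
    if change.node not in intent.topology:
        errors.append(f"topology mismatch: unknown node {change.node}")
    return errors


def deploy(change: Change, intent: Intent, apply: Callable[[Change], None]) -> tuple:
    """Apply the change only if the dry run is clean; otherwise stop and report why."""
    errors = dry_run(change, intent)
    if errors:
        return False, errors  # deployment halts; nothing is applied
    apply(change)
    return True, []
```

Collecting every error rather than failing on the first one gives the operator a complete picture of what to fix before retrying.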

In business terms, this validation process represents real money. By ensuring accuracy before the first packet flows, enterprises avoid both unplanned outages and extended maintenance windows. The Bell Labs modeling showed that these improvements translate into a reduction of up to 60% in SLA penalty costs and of as much as 53% in revenue loss due to downtime. Simply put, fewer bad changes mean fewer late-night rollbacks, fewer service credits, and more consistent customer experiences.

Configuration Consistency Through Modularity

SR Linux’s architecture is intentionally disaggregated, meaning each component, from routing protocols to management agents, operates as an independent module communicating through an open API. During configuration, this allows the system to validate and apply updates incrementally rather than reloading entire subsystems. The result is non-disruptive provisioning even in large-scale fabrics. For network operators managing hundreds or thousands of leaf-spine nodes, this modularity drastically shortens the change window and reduces cumulative risk.
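A minimal sketch of incremental application, assuming configuration is held as a hypothetical dictionary keyed by module name: only modules whose intended configuration differs from the running one are returned for update, and modules absent from the intent are marked for removal. This illustrates the idea only; it is not a description of SR Linux internals.

```python
from typing import Dict, Optional


def changed_modules(running: Dict[str, dict], intended: Dict[str, dict]) -> Dict[str, Optional[dict]]:
    """Return only the modules that need touching.

    A dict value is the new configuration for that module; None means the
    module is present in the running config but absent from the intent.
    Unchanged modules are omitted, so they are never reloaded.
    """
    delta: Dict[str, Optional[dict]] = {}
    for name, cfg in intended.items():
        if running.get(name) != cfg:
            delta[name] = cfg
    for name in sorted(running.keys() - intended.keys()):
        delta[name] = None
    return delta


# Hypothetical module names: only bgp changes and acl is removed; lldp is untouched.
running = {"bgp": {"asn": 65001}, "lldp": {"enabled": True}, "acl": {"rules": 3}}
intended = {"bgp": {"asn": 65002}, "lldp": {"enabled": True}}
delta = changed_modules(running, intended)
```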

EDA extends this benefit through group-based upgrades, which let teams patch or reconfigure entire groups of targeted nodes in parallel. In the reliability model, this feature reduced planned maintenance downtime while also lowering the probability of protection errors: those moments when failovers don’t behave as expected because configurations are out of sync. By automating these repetitive tasks, network operators not only improve reliability but also free staff to focus on higher-value design and optimization work.
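Group-based rollout can be sketched with Python’s standard `concurrent.futures` module: nodes within a group are updated in parallel, and the rollout halts before the next group if any node in the current group fails. The `upgrade` callable and the node names are hypothetical placeholders for whatever action an orchestrator would actually perform.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def rollout_in_groups(groups: List[List[str]], upgrade: Callable[[str], bool], max_workers: int = 8) -> List[str]:
    """Upgrade the nodes of each group in parallel, one group at a time.

    If any node in a group reports failure, later groups are not started.
    Returns the nodes that were upgraded successfully, in group order.
    """
    done: List[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for group in groups:
            results = list(pool.map(upgrade, group))  # map preserves input order
            done.extend(node for node, ok in zip(group, results) if ok)
            if not all(results):
                break  # halt before touching the next group
    return done


def fake_upgrade(node: str) -> bool:
    """Hypothetical stand-in for a real upgrade action; leaf4 simulates a failure."""
    return node != "leaf4"


groups = [["leaf1", "leaf3"], ["leaf2", "leaf4"], ["spine1"]]
done = rollout_in_groups(groups, fake_upgrade)
```

Stopping between groups bounds how many nodes a bad change can reach, while parallelism inside each group keeps the overall change window short.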

The Business Impact of Predictable Provisioning

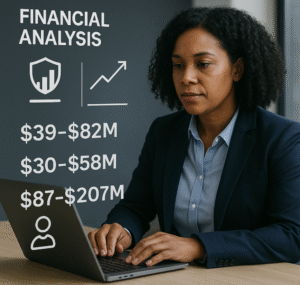

The financial analysis performed by Bell Labs Consulting reinforces that reliability improvements in configuration and provisioning have a measurable bottom-line impact. Because financial impacts depend on multiple factors, the case study uses two scenarios, moderate and aggressive, to reflect the range of savings. For a mid-sized enterprise migrating from a legacy PMO network to the SR Linux and EDA architecture, the report estimated annual savings of $39–82 million in reduced penalty costs, $30–58 million in avoided revenue loss, and $87–207 million in preserved brand value. While these numbers vary by scale and sector, they underscore a central truth: automation at the configuration layer isn’t just operational hygiene; it’s a strategic investment in uptime and reputation.

Technically, the model caps the improvement from hardware and software at 20% and attributes most of the gain, up to 90% of the total reliability increase, to operational transformation: the automation, validation, and disciplined change control enabled by SR Linux and EDA. That finding reinforces a key insight. You can’t buy reliability purely through hardware refresh cycles; it must be engineered into how configurations are created, tested, and deployed.

Operational Transformation in Practice

Consider a large enterprise adopting SR Linux and EDA for its regional data centers. Previously, configuration rollouts required overnight maintenance windows, with engineers manually verifying each stage. Now, the enterprise uses the EDA digital twin to model configurations in advance, runs EDA dry-runs to validate dependencies, and executes deployments in parallel groups.

What once took twelve hours can be accomplished in two hours with full rollback assurance and telemetry feedback confirming compliance with the intended state. Over time, the organization reports both higher uptime and lower operational overhead, translating directly to improved customer SLAs and fewer emergency interventions.

Reliability as a Continuous Configuration Feedback Loop

In the FMO architecture, every provisioning event generates telemetry that feeds back into EDA’s analytics engine. If anomalies appear post-deployment, such as unexpected utilization patterns or policy violations, EDA triggers corrective workflows automatically. This closed-loop feedback ensures the network remains aligned with its intended configuration long after the initial rollout, turning provisioning into a continuous reliability process rather than a one-time activity.
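One pass of such a closed loop might look like the sketch below, assuming hypothetical inputs: the intended per-node configuration, the configuration observed via telemetry, and per-node utilization with an assumed 0.8 threshold. Drifted nodes get a hypothetical `reapply-intent` action and overloaded nodes a `rebalance-traffic` action; these names are illustrative, and a real system would dispatch such actions to its own remediation workflows.

```python
from typing import Dict, List, Tuple


def closed_loop_pass(
    intended: Dict[str, dict],
    observed: Dict[str, dict],
    utilization: Dict[str, float],
    max_util: float = 0.8,
) -> List[Tuple[str, str]]:
    """Compare telemetry against intent and return corrective actions (hypothetical names)."""
    actions: List[Tuple[str, str]] = []
    # Configuration drift: the node no longer matches its intended state.
    for node, cfg in intended.items():
        if observed.get(node) != cfg:
            actions.append((node, "reapply-intent"))
    # Unexpected utilization: flag nodes above the assumed threshold.
    for node, util in sorted(utilization.items()):
        if util > max_util:
            actions.append((node, "rebalance-traffic"))
    return actions


intended = {"leaf1": {"mtu": 9000}, "leaf2": {"mtu": 9000}}
observed = {"leaf1": {"mtu": 9000}, "leaf2": {"mtu": 1500}}
utilization = {"leaf1": 0.92, "leaf2": 0.40}
actions = closed_loop_pass(intended, observed, utilization)
```

Running this pass on every telemetry update, rather than once after rollout, is what turns provisioning into a continuous process.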

Configuration and provisioning once represented the riskiest stage of the network lifecycle. The Nokia SR Linux and EDA solution changes that paradigm by embedding verification, automation, and modular control directly into the deployment process. The result is not only a dramatic reduction in human error and downtime but also a quantifiable financial advantage.

This blog post is number 2 in a series of 4. To see the other posts, visit: https://techstrong.it/category/sponsored/blc-report-blog-series/
